This work produces the missing region of the image using an existing U-net + partial convolution. Below are some results of the Contextual Attention network. For a more detailed explanation, check out the paper. The paper also proposes the attention visualization to indirectly gauge where the network is paying the most attention to generate the missing region.

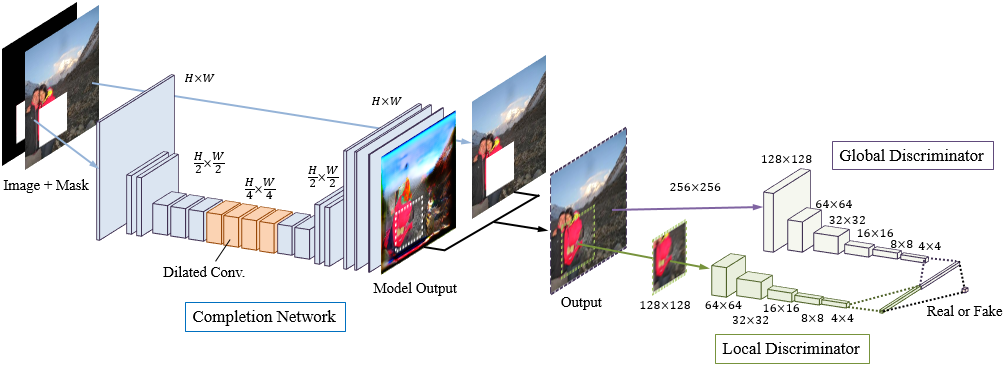

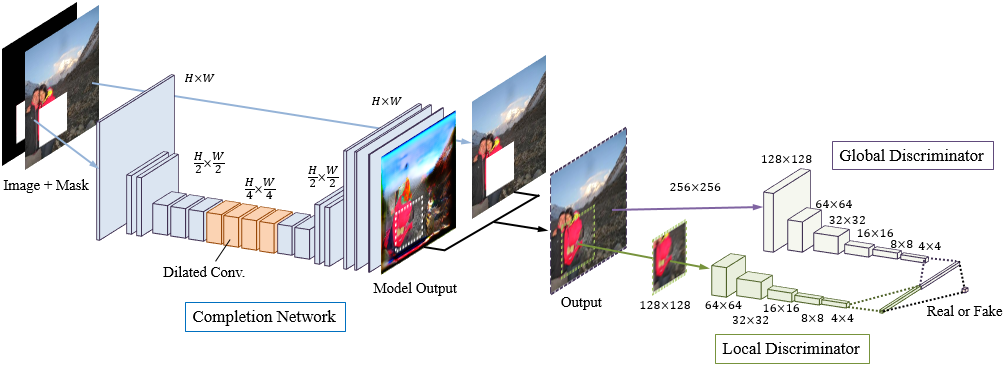
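The post does not detail how a partial convolution works, so here is a minimal single-channel NumPy sketch of the update as we understand it from the paper. The convolution only sees valid (unmasked) pixels. The result is rescaled by sum(1)/sum(M), where M is the mask window. An output location becomes valid if at least one pixel in its window was valid. The function name, the stride of 1 and the zero padding (which treats the image border as invalid) are our own assumptions.

```python
import numpy as np


def partial_conv2d(x, mask, weight, bias=0.0):
    """Single-channel partial convolution, stride 1, 'same' zero padding (sketch).

    x, mask : 2-D arrays of equal shape; mask is 1 for valid pixels, 0 for holes.
    weight  : square 2-D kernel with an odd side length.
    Returns (output, updated_mask).
    """
    k = weight.shape[0]
    pad = k // 2
    xp = np.pad(x * mask, pad)   # hole pixels are zeroed before convolving
    mp = np.pad(mask, pad)       # the padded border counts as invalid
    h, w = x.shape
    out = np.zeros((h, w))
    new_mask = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            valid = mp[i:i + k, j:j + k].sum()
            if valid > 0:
                window = xp[i:i + k, j:j + k]
                # Cross-correlate over valid pixels only, then rescale by
                # sum(1) / sum(M) so the result does not depend on how many
                # pixels in the window were missing.
                out[i, j] = (weight * window).sum() * (weight.size / valid) + bias
                # Any valid input pixel makes this output location valid.
                new_mask[i, j] = 1.0
    return out, new_mask
```

As a check, take a constant image with a small hole and a 3x3 averaging kernel. The output is the same constant everywhere. The updated mask shrinks the hole by one pixel on each side, which is how repeated layers gradually fill it in.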

This paper uses a two-stage network and a contextual attention module to improve the quality of the generated images. The architecture is described as a coarse-to-fine network.

The coarse network consists of convolution + dilated convolution + upsampling + convolution layers. Its input is the image with the hole, the mask, and 1-mask (a 5-channel image). It is trained with a spatially discounted L1 loss (a reconstruction loss). The motivation is that the inpainting task can have many plausible solutions for a given context. Missing pixels near the hole boundaries have much less ambiguity than pixels closer to the center of the hole, so strongly enforcing the reconstruction loss on the central pixels may mislead the training process. The loss is therefore weighted according to each pixel's distance from the hole border, with pixels near the border weighted more heavily.

The refinement network contains two paths followed by a concatenation. Its input is a composite of the original image and the coarse output, together with the mask and 1-mask (again a 5-channel image). During the forward pass, the paths split after the initial convolution + dilated convolution layers.

The contextual attention layer is a module in the refinement network that calculates the similarity between regions within the image. The motivation behind this module is that when you reconstruct a missing region, you look at the most similar-looking regions in other parts of the same image. To calculate this similarity efficiently, the authors propose the following method: extract patches, apply a convolution, then a softmax. The pictures below might aid understanding. First, take one of the extracted patches and use it as a filter to convolve with the feature map itself. Repeat this for all extracted patches and stack the results channel-wise, so that each output channel corresponds to one patch. Repeat the same procedure for the mask, and multiply it with the features so that the channels of patches lying in the missing region are sent to zero. Finally, take the softmax of the output to normalize the contextual attention.
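Here is a minimal NumPy sketch of the spatially discounted L1 loss. It follows the paper's weighting as we understand it: each hole pixel is weighted by gamma ** l, where l is its distance to the nearest known pixel and gamma = 0.99. Several choices are our own assumptions: the 4-neighbour distance, the mask convention (1 = hole), averaging over all pixels, and the names `discount_weights` and `spatially_discounted_l1`.

```python
import numpy as np
from collections import deque


def discount_weights(mask, gamma=0.99):
    """gamma ** l for each hole pixel, where l is the 4-neighbour distance to
    the nearest known pixel; known pixels get weight 0.

    mask: 2-D array, 1 inside the hole and 0 for known pixels (assumed convention).
    """
    h, w = mask.shape
    dist = np.full((h, w), -1, dtype=int)
    queue = deque()
    for i in range(h):
        for j in range(w):
            if mask[i, j] == 0:          # known pixels are the BFS sources
                dist[i, j] = 0
                queue.append((i, j))
    if not queue:
        raise ValueError("mask has no known pixels")
    while queue:                         # multi-source breadth-first search
        i, j = queue.popleft()
        for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ni, nj = i + di, j + dj
            if 0 <= ni < h and 0 <= nj < w and dist[ni, nj] < 0:
                dist[ni, nj] = dist[i, j] + 1
                queue.append((ni, nj))
    return np.where(mask == 1, gamma ** dist.astype(float), 0.0)


def spatially_discounted_l1(pred, target, mask, gamma=0.99):
    """L1 loss restricted to the hole, down-weighted towards the hole centre."""
    weights = discount_weights(mask, gamma)
    return float(np.mean(weights * np.abs(pred - target)))
```

Consider a 3x3 hole in a 5x5 image. The eight hole pixels that touch known pixels get weight 0.99, while the centre pixel gets 0.99 ** 2. An error at the centre therefore counts slightly less than the same error near the border.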

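The two 5-channel inputs described above (image with hole, mask, 1-mask for the coarse stage; composite of original and coarse output, mask, 1-mask for the refinement stage) can be sketched as follows. The mask convention (1 inside the hole), zero-filling the hole in the coarse input and the helper names are hypothetical choices for illustration, not taken from the authors' code.

```python
import numpy as np


def stack_with_mask(rgb, mask):
    """Append mask and 1-mask to an (H, W, 3) image, giving (H, W, 5)."""
    m = mask[..., None].astype(rgb.dtype)
    return np.concatenate([rgb, m, 1.0 - m], axis=-1)


def coarse_input(image, mask):
    """Coarse stage: the image with the hole zeroed out, plus mask and 1-mask."""
    m = mask[..., None]
    return stack_with_mask(image * (1.0 - m), mask)


def refine_input(image, coarse_out, mask):
    """Refinement stage: known pixels from the original image, hole pixels
    from the coarse output, plus mask and 1-mask."""
    m = mask[..., None]
    composite = image * (1.0 - m) + coarse_out * m
    return stack_with_mask(composite, mask)
```

Building the composite before stacking the mask channels is what lets the refinement network see the coarse prediction only inside the hole and the untouched original everywhere else.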

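The contextual attention similarity computation (patches as filters, masking, softmax) can be sketched in NumPy on a small feature map. Convolving the feature map with a patch-shaped filter is the same as taking a dot product between that filter and every local window, so the sketch uses one matrix product instead of explicit convolutions. Normalizing the patches to unit length follows the paper's cosine-similarity formulation. The softmax `scale` of 10, the 3x3 patch size and the function names are assumptions. Instead of multiplying by the mask after the softmax, patches touching the hole get a score of -inf before it. This sends their channels to exactly zero.

```python
import numpy as np


def extract_patches(x, k=3):
    """All k x k patches (stride 1, zero padding) of an (H, W, C) array,
    flattened to shape (H * W, k * k * C)."""
    h, w, _ = x.shape
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    return np.stack([xp[i:i + k, j:j + k, :].ravel()
                     for i in range(h) for j in range(w)])


def contextual_attention(feat, mask, k=3, scale=10.0):
    """feat: (H, W, C) feature map; mask: (H, W), 1 inside the hole.

    Returns (H, W, H * W) attention: one channel per extracted patch,
    softmax-normalized over the channel axis at every location.
    """
    h, w, _ = feat.shape
    patches = extract_patches(feat, k)
    # Each patch, normalized to unit length, acts as a convolution filter.
    norms = np.linalg.norm(patches, axis=1, keepdims=True)
    filters = patches / np.maximum(norms, 1e-8)
    # Convolving the feature map with every filter equals the dot product of
    # every local window with every filter: shape (H*W windows, H*W filters).
    scores = patches @ filters.T
    # Repeat the patch extraction on the mask: a patch touching the hole is
    # not a valid source, so its channel must end up with zero weight.
    valid = extract_patches(mask[..., None].astype(float), k).sum(axis=1) == 0
    if not valid.any():
        raise ValueError("no patch lies fully outside the hole")
    scores = np.where(valid[None, :], scale * scores, -np.inf)
    # Numerically stable softmax over the patch (channel) axis.
    scores -= scores.max(axis=1, keepdims=True)
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn.reshape(h, w, h * w)
```

The sketch stops at the attention weights. Reconstructing the missing features from them is not covered here.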
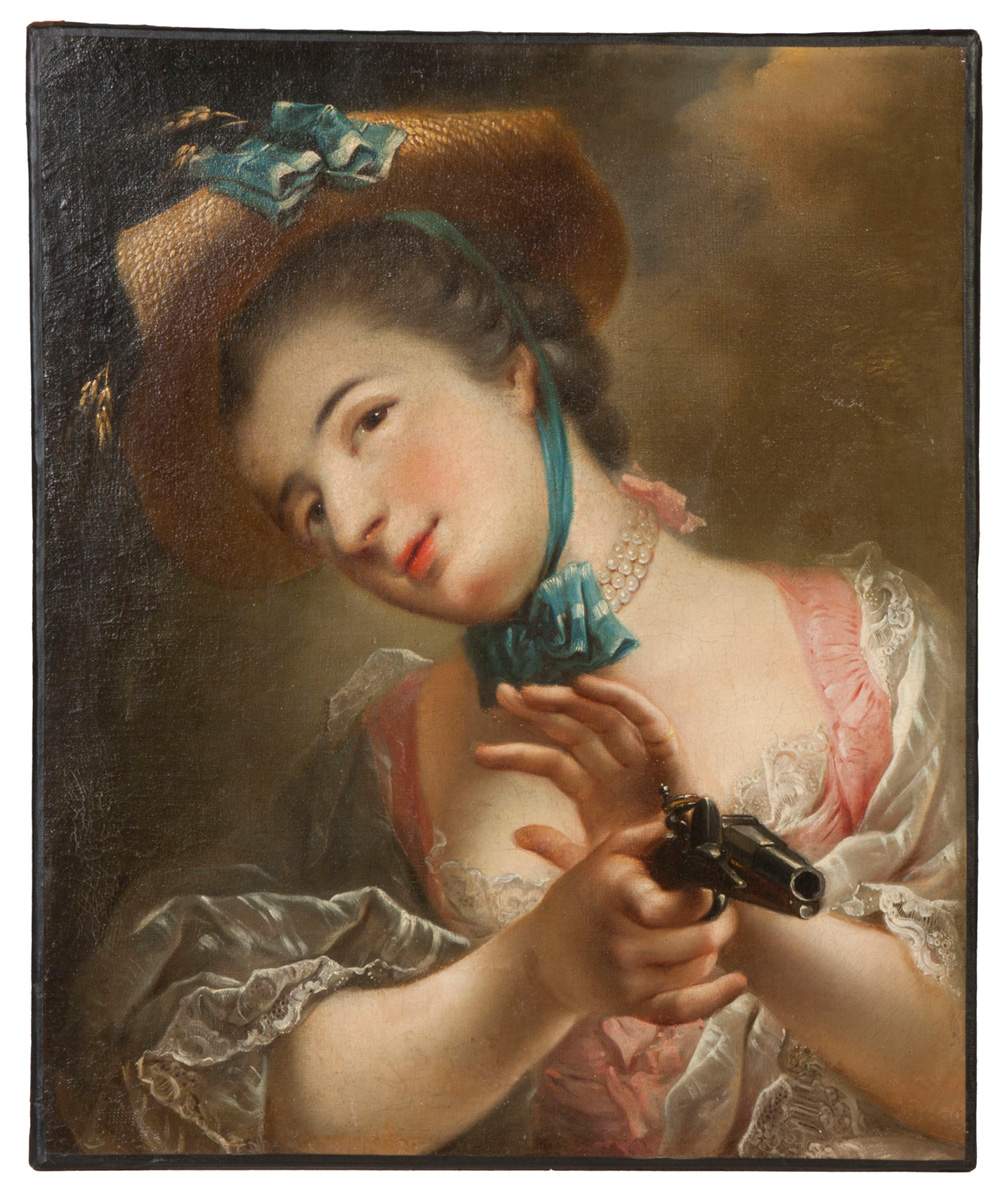

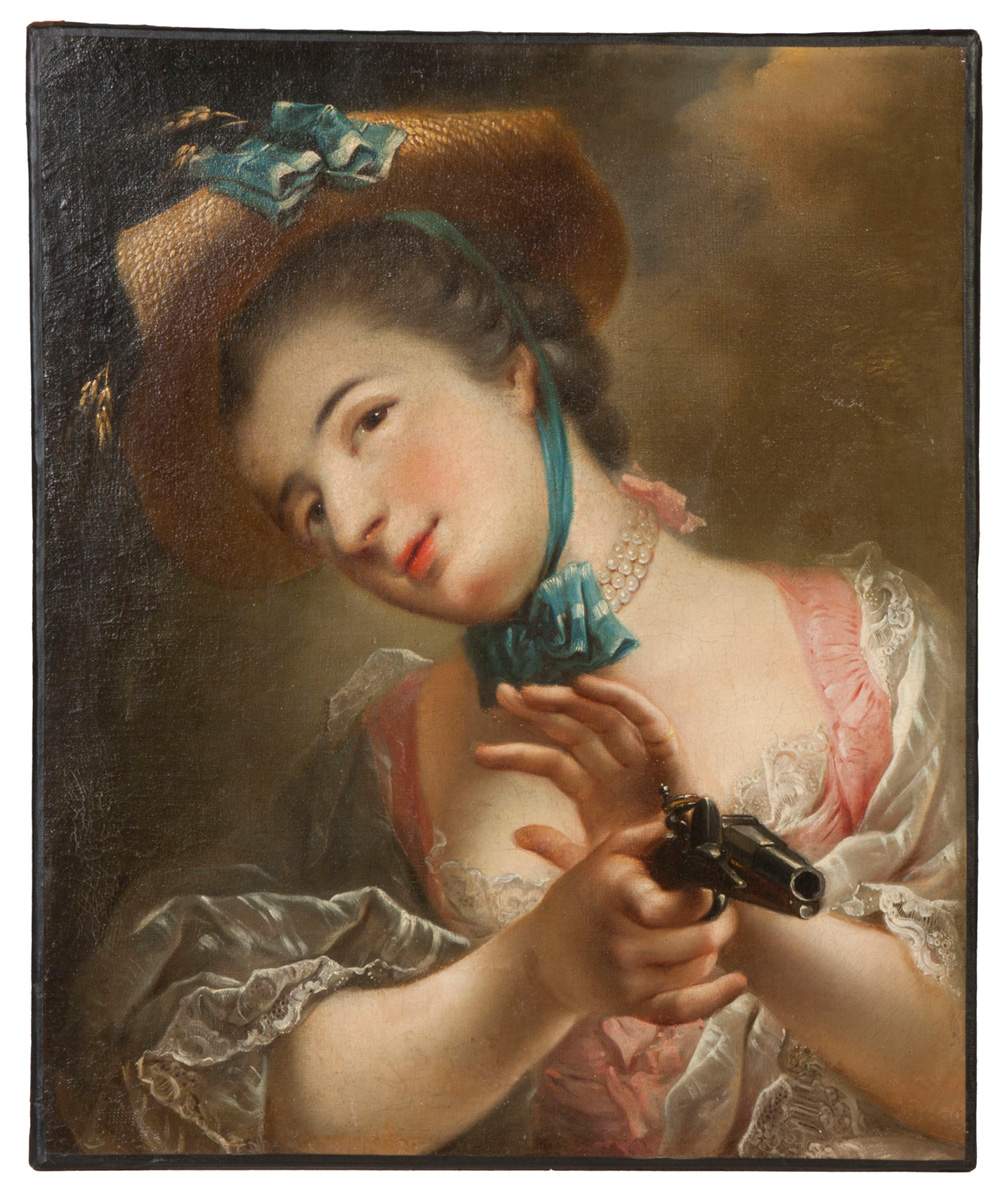

The adversarial loss trains the generator to produce images that make it hard for the discriminator to distinguish between generated and real images. The accompanying image shows a result from the paper; as you can see, the quality of the produced image leaves room for improvement. Furthermore, the architecture depends on the shape of the missing region, which can be inconvenient in real-world applications.

The main idea of Context Encoders is to generate the missing part of the image using an encoder-decoder structure trained with an adversarial loss. The input is a picture with a missing region in the center. The encoder produces a latent feature representation of that image, and the decoder produces the missing image content. The network is trained to match the ground truth of the missing region. To train the network, the authors use two losses: a reconstruction loss and an adversarial loss. The reconstruction loss is an L2 loss between the ground-truth image and the produced image, computed over the missing region.
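A minimal sketch of the Context Encoder training objective for the generator combines two terms. The first is a masked L2 reconstruction loss. The second is the generator side of a GAN loss. Several parts are assumed defaults for illustration: the non-saturating -log D(G(x)) form, the weights 0.999 / 0.001 (which make reconstruction dominate) and the function names. Consult the paper for the exact formulation and values.

```python
import numpy as np


def reconstruction_loss(pred, target, mask):
    """Masked L2 loss: only pixels inside the missing region (mask == 1) count."""
    return float(np.sum((mask * (target - pred)) ** 2))


def generator_adversarial_loss(d_fake, eps=1e-8):
    """Generator term of a GAN loss, -log D(G(x)); d_fake holds the
    discriminator's probabilities that the generated samples are real."""
    return float(-np.mean(np.log(d_fake + eps)))


def joint_loss(pred, target, mask, d_fake, lambda_rec=0.999, lambda_adv=0.001):
    """Weighted sum of both losses (the weights are assumed defaults)."""
    return (lambda_rec * reconstruction_loss(pred, target, mask)
            + lambda_adv * generator_adversarial_loss(d_fake))
```

Because the reconstruction term is masked, errors outside the hole do not affect it at all. The adversarial term pushes the generated content towards outputs the discriminator accepts as real.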

In this post, we would like to cover 3 papers to get a glimpse of how the field has evolved: Context Encoders: Feature Learning by Inpainting (2016); Generative Image Inpainting with Contextual Attention (2018); and Image Inpainting for Irregular Holes Using Partial Convolutions (2018).

In this post, we would like to give a review of selected papers on "Image Inpainting". Image inpainting is a field of research where you fill in the missing area within a picture. The goal of this task is to generate an image that looks as realistic as possible. Check the generated image from the paper Generative Image Inpainting with Contextual Attention (2018).
